Are outliers automatically removed in Experience Results or reports?

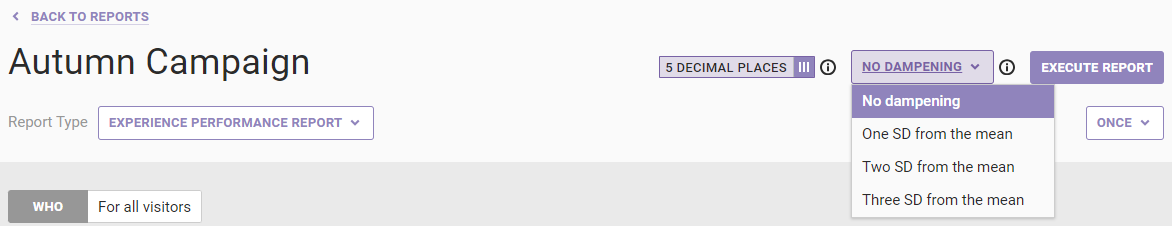

Outliers are not automatically removed. However, you can adjust the dampening setting to change this behavior. Dampening reduces any purchase in a report that is above the selected threshold to X standard deviations (SD) above the mean. This limits the effect of outliers to a degree that depends on the threshold you select.
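The capping rule can be sketched in Python as follows. This is an illustration only. It assumes the mean and the population standard deviation are computed over all purchase values in the report, which may not match the platform's exact calculation, and `dampen` is a hypothetical helper name.

```python
from statistics import mean, pstdev


def dampen(purchases: list[float], num_sd: float) -> list[float]:
    """Cap each purchase at mean + num_sd * SD (hypothetical helper).

    Assumption: the mean and population SD are computed over all purchases
    in the report; the platform's exact method may differ.
    """
    mu = mean(purchases)
    threshold = mu + num_sd * pstdev(purchases)
    # Values above the threshold are reduced to the threshold; others are unchanged.
    return [min(value, threshold) for value in purchases]


# Hypothetical order values with one large outlier.
orders = [50.0, 60.0, 55.0, 45.0, 2000.0]
print(dampen(orders, num_sd=1.0))
```

A smaller `num_sd` lowers the threshold and dampens more aggressively. Keep in mind that an extreme value also inflates the SD itself, so a single large outlier is capped less tightly than you might expect.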

Follow these steps to change the dampening setting.

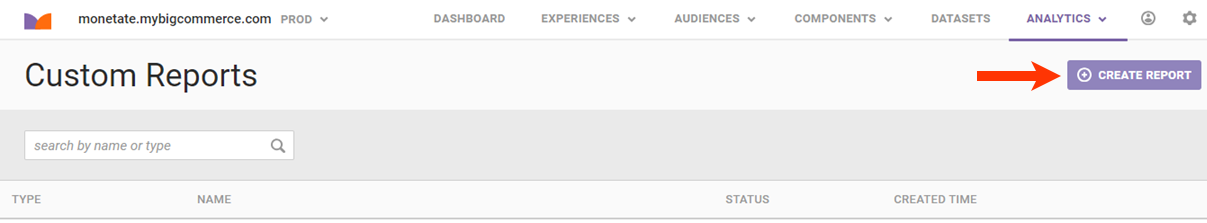

- Click ANALYTICS and then select Custom Reports.

- Click CREATE REPORT.

- Choose an option from the dampening setting selector.


Why does an experience show confidence for some time periods but not for the overall experience summary?

The platform calculates statistical confidence for the specific time period that you're examining. For example, if you compare metrics from Earliest to date with metrics from Last 7 days, the confidence level and the estimated time to confidence may differ. Several factors influence statistical confidence, including the number of sessions and the magnitude of the difference between the experiment and control groups. So although a single day can yield confidence of 90% or higher, the experience may have greater or lesser confidence over a longer period.
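To illustrate how the number of sessions affects confidence, the following sketch runs a pooled two-proportion z-test on the same conversion rates over two date ranges. It assumes "confidence" can be modeled as 1 minus the two-sided p-value. That definition, the `confidence` helper, and all session and conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def confidence(conv_a: int, sessions_a: int, conv_b: int, sessions_b: int) -> float:
    """Return 1 - two-sided p-value of a pooled two-proportion z-test.

    conv_a/sessions_a: experiment group; conv_b/sessions_b: control group.
    Assumption: 'confidence' is modeled as 1 - p; the platform's definition may differ.
    """
    rate_a, rate_b = conv_a / sessions_a, conv_b / sessions_b
    pooled = (conv_a + conv_b) / (sessions_a + sessions_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / sessions_a + 1 / sessions_b))
    z = (rate_a - rate_b) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return 1 - p_value


# Hypothetical data: the same 5.5% vs 5.0% conversion rates over two date ranges.
last_7_days = confidence(110, 2_000, 100, 2_000)
earliest_to_date = confidence(1_100, 20_000, 1_000, 20_000)
print(f"Last 7 days: {last_7_days:.1%}  Earliest to date: {earliest_to_date:.1%}")
```

With ten times as many sessions, the same difference in rates moves from roughly 52% to roughly 97% confidence. A date range where the rates differ by more or less would shift the result in either direction.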

Does running concurrent tests prevent me from drawing accurate conclusions?

Running concurrent tests is a valid approach to website testing. After all, site visitors are all different. In a laboratory experiment, a researcher might ask hundreds of screening questions to control for sample diversity in advance, but online marketers can't do this. Instead, visitor diversity is handled by requiring a sufficient sample size before declaring statistical confidence. For the same reason, running multiple overlapping tests is valid: another concurrent test adds one more source of diversity to each sample group, and a sufficiently large sample offsets it.
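The following simulation sketches why sample size offsets an overlapping test. It assumes two tests that each split traffic 50/50, randomly and independently of each other; this setup is hypothetical and does not describe how the platform assigns visitors. With enough visitors, test A's experiment and control groups contain nearly the same share of test B's variant, so test B's effect is spread evenly across both.

```python
import random


def share_in_b_variant(num_visitors: int, seed: int = 7) -> tuple[float, float]:
    """Simulate two overlapping tests with independent 50/50 splits.

    Returns the fraction of test A's experiment group and of test A's control
    group that were also placed in test B's variant (hypothetical setup).
    """
    rng = random.Random(seed)
    totals = {"experiment": 0, "control": 0}
    in_b_variant = {"experiment": 0, "control": 0}
    for _ in range(num_visitors):
        group_a = "experiment" if rng.random() < 0.5 else "control"
        totals[group_a] += 1
        if rng.random() < 0.5:  # assignment to test B is independent of test A
            in_b_variant[group_a] += 1
    return (in_b_variant["experiment"] / totals["experiment"],
            in_b_variant["control"] / totals["control"])


for n in (100, 100_000):
    exp_share, ctl_share = share_in_b_variant(n)
    print(f"{n} visitors: experiment {exp_share:.3f}, control {ctl_share:.3f}")
```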

How does Monetate test for differences between the experiment and control groups?

Monetate uses standard statistical tests to check for differences between the experiment and control groups. For mean-based metrics, which measure the size of a quantity, the platform uses a two-sided t-test. For proportion-based metrics, which measure whether a certain action occurred (for example, the visitor completed an order or left the site immediately), Monetate uses a z-test based on the normal approximation to the binomial distribution (the z-statistic).
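The mean-based case can be sketched as follows. The sketch assumes the unequal-variance (Welch) form of the two-sided t-test, which the text above does not specify, and the sample values are hypothetical. The p-value it returns uses the normal approximation, which is only close to the t distribution for large groups; for small samples, use a t-distribution implementation such as `scipy.stats.ttest_ind(..., equal_var=False)`. Proportion-based metrics follow the same pattern, with the z-statistic computed as the difference in rates divided by the pooled standard error.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def welch_t_test(experiment: list[float], control: list[float]) -> tuple[float, float, float]:
    """Return (t, degrees of freedom, approximate two-sided p-value).

    Assumption: the unequal-variance (Welch) form of the t-test. The p-value
    uses the normal approximation, which is close to the t distribution only
    when both groups are large.
    """
    se2_exp = variance(experiment) / len(experiment)  # squared standard error
    se2_ctl = variance(control) / len(control)
    t = (mean(experiment) - mean(control)) / sqrt(se2_exp + se2_ctl)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se2_exp + se2_ctl) ** 2 / (
        se2_exp**2 / (len(experiment) - 1) + se2_ctl**2 / (len(control) - 1)
    )
    p_approx = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, df, p_approx


# Hypothetical per-session values; too few for the normal p-value to be reliable.
t, df, _ = welch_t_test([10.0, 12.0, 11.0, 13.0], [1.0, 2.0, 3.0, 4.0])
print(f"t = {t:.2f}, df = {df:.1f}")
```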

How often is experience data updated in the reporting suite and user interface?

Data for each entry on the Experience Analytics list page is refreshed nightly, and reporting data is also updated nightly.

If a visitor is placed into one experience split during a Monetate session, will they always be placed into the same split after the session ends?

Yes. Visitors stay in the same split unless they clear their cookies. The Monetate session is used only for reporting, so a visitor who starts a new session is not treated as needing to be placed into a new split.
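One way to get this behavior is to derive the split from a visitor ID stored in a cookie rather than from the session. The sketch below is a hypothetical scheme, not the platform's documented mechanism: `assign_split`, the SHA-256 hashing, and the ID formats are all assumptions. Because the session ID is not part of the key, a new session keeps the same split, while clearing cookies produces a new visitor ID that may land in a different split.

```python
import hashlib


def assign_split(visitor_id: str, experience_id: str, experiment_percent: int = 50) -> str:
    """Deterministically map a cookie-stored visitor ID to a split (hypothetical scheme)."""
    key = f"{experience_id}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100  # stable value in 0..99
    return "experiment" if bucket < experiment_percent else "control"


# The session ID is not part of the key, so a new session keeps the same split.
print(assign_split("visitor-123", "exp-42") == assign_split("visitor-123", "exp-42"))
# Clearing cookies yields a new visitor ID, which may land in a different split.
print(assign_split("visitor-456", "exp-42"))
```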

Does a Purchase Audit Report show purchases visitors made before seeing an experience?

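It can. The Purchase Audit Report includes the order number for anyone who made a purchase and also qualified for the experience at any point in the same Monetate session. An order placed before the visitor qualified for the experience, but within that same session, therefore still appears in the report.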
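The session-based rule can be sketched as a filter that matches orders to qualifying sessions and ignores the order of events within the session. The `Order` record, its fields, and the `purchase_audit` helper are hypothetical and do not reflect the report's actual data model.

```python
from dataclasses import dataclass


@dataclass
class Order:
    order_id: str
    session_id: str
    minute: int  # minutes since the session started (hypothetical field)


def purchase_audit(orders: list[Order], qualified_at: dict[str, int]) -> list[str]:
    """List order IDs from sessions that qualified for the experience.

    qualified_at maps session ID -> minute the session qualified. The order's
    timing relative to qualification is deliberately ignored.
    """
    return [o.order_id for o in orders if o.session_id in qualified_at]


orders = [
    Order("A-1", "s1", minute=5),   # purchased before qualifying at minute 20
    Order("A-2", "s2", minute=40),  # purchased after qualifying at minute 10
    Order("A-3", "s3", minute=15),  # session never qualified
]
print(purchase_audit(orders, {"s1": 20, "s2": 10}))
```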

Why did the analytics or reporting numbers change after I ended an experience?

Sometimes you may see the numbers in analytics or reporting change after you pause or end an experience. That's because the platform collects data based on the Monetate session, which expires after 30 minutes of inactivity but can last as long as 12 hours if the visitor is active at least once every 30 minutes. Therefore, after you pause or end an experience, the platform may continue to collect data until customers' current sessions end, which can take up to 12 hours.

For example, if a conversion occurs after you pause the experience but the customer who converted was part of a session that started before you clicked pause, this conversion is still included in the analytics and reporting for that experience.
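The session rules above can be sketched as a function that computes when a session expires. Times are in minutes, and the sketch assumes that activity exactly 30 minutes after the previous activity still extends the session; the exact boundary behavior, the `session_end` helper, and the example timeline are hypothetical.

```python
INACTIVITY_TIMEOUT = 30        # minutes without activity before a session expires
MAX_SESSION_LENGTH = 12 * 60   # hard cap on session length, in minutes


def session_end(activity_minutes: list[int]) -> int:
    """Return the minute at which the session opened by the first activity expires.

    activity_minutes must be sorted. Activity after a gap longer than the
    timeout, or after the 12-hour cap, belongs to a new session.
    """
    start = last = activity_minutes[0]
    for minute in activity_minutes[1:]:
        if minute - last > INACTIVITY_TIMEOUT or minute >= start + MAX_SESSION_LENGTH:
            break
        last = minute
    return min(last + INACTIVITY_TIMEOUT, start + MAX_SESSION_LENGTH)


# Hypothetical visitor: session starts at minute 0, experience paused at minute 45,
# and the visitor converts at minute 60 without a 30-minute gap in activity.
activity = [0, 25, 50, 60]
pause_minute, conversion_minute = 45, 60
end = session_end(activity)
# True means the conversion came after the pause but inside the original session.
print(pause_minute < conversion_minute < end)
```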

Why does desktop traffic appear for an experience targeting mobile users in reporting?

If you create an experience that only displays for mobile users, it's reasonable to believe that desktop users won't see the experience. This is exactly how experiences work 99% of the time.

So what happens in the 1% (or less) of cases where desktop traffic appears in reporting for a mobile experience?

The desktop/mobile report filter is applied after the fact: the desktop or mobile designation is determined when the report is created, not when the visitor qualified for the experience. To stay as up to date as possible, the definitions of desktop and mobile devices are refreshed every week through a third party. These designations are based on user agents, which identify device/browser combinations.

As a result, some of your traffic may have qualified for a mobile experience on a device that was designated as mobile when the customer arrived on your site but was redesignated as desktop before you ran the report. While this situation is largely unavoidable because user agents change constantly, it is unlikely to affect a noticeable portion of your customer base.
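The effect of classifying devices at report time can be sketched as a lookup against whichever device table is current when the report runs. The user-agent keys, both tables, and the `report_device_counts` helper are hypothetical placeholders, not real user agents or the third party's data.

```python
from collections import Counter


def report_device_counts(session_user_agents: list[str], device_table: dict[str, str]) -> Counter[str]:
    """Classify each session's user agent with the table that is current at report time."""
    return Counter(device_table.get(ua, "unknown") for ua in session_user_agents)


# Hypothetical user-agent keys; all three sessions qualified as mobile when they visited.
sessions = ["UA-phone", "UA-phone", "UA-new-device"]
table_at_visit = {"UA-phone": "mobile", "UA-new-device": "mobile"}
table_at_report = {"UA-phone": "mobile", "UA-new-device": "desktop"}  # after a weekly refresh

print(report_device_counts(sessions, table_at_visit))
print(report_device_counts(sessions, table_at_report))
```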

Why does existing analytics data disappear when I change the experience start time after activation?

Experience analytics cover only the period from the experience's current start time to the present. If you change the start time, data collected outside that range no longer appears.
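The start-time window can be sketched as a simple filter. The dates and the `visible_events` helper are hypothetical. Events before the edited start time drop out of view even though they were recorded, which is consistent with the data being recoverable through your Services team.

```python
from datetime import datetime


def visible_events(event_times: list[datetime], start_time: datetime, now: datetime) -> list[datetime]:
    """Return the events that analytics would show: those from start_time through now."""
    return [t for t in event_times if start_time <= t <= now]


events = [datetime(2024, 5, 1), datetime(2024, 5, 3), datetime(2024, 5, 6)]
now = datetime(2024, 5, 7)
print(len(visible_events(events, datetime(2024, 5, 1), now)))  # original start time
print(len(visible_events(events, datetime(2024, 5, 4), now)))  # start time edited later
```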

Instead of changing the start time of an existing experience, either reactivate the experience without editing its start time, or duplicate the experience and set a new start time on the copy.

Contact your Services team to recover data if you have edited an experience start time after activation.

How is the Add to cart rate calculated?

A session counts toward the Add to cart rate when the addCartRows API method is passed in a trackData call during that session. Passing a mini-cart collect should not impact this metric, because once Add to cart is true for a session, the platform counts that event only once. Analytics shows the percentage of sessions in which an item was added to the cart.
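The calculation can be sketched as the share of sessions in which `addCartRows` was passed at least once. The data shape (session ID mapped to a list of method names) and the `add_to_cart_rate` helper are hypothetical; the point is that repeated calls within one session, such as a mini-cart collect, still count once.

```python
def add_to_cart_rate(session_calls: dict[str, list[str]]) -> float:
    """Percent of sessions whose trackData calls passed addCartRows at least once.

    session_calls maps a session ID to the API method names passed in that
    session (hypothetical data shape). Repeated calls count once per session.
    """
    if not session_calls:
        return 0.0
    sessions_with_add = sum(1 for methods in session_calls.values() if "addCartRows" in methods)
    return 100.0 * sessions_with_add / len(session_calls)


calls = {
    "s1": ["addCartRows", "addCartRows"],  # e.g. an add to cart plus a mini-cart collect
    "s2": [],
    "s3": ["addCartRows"],
    "s4": [],
}
print(add_to_cart_rate(calls))
```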

What do Experience Group 1 and Experience Group 0 in Reports mean?

Experience Group 1 represents experiment split group A, and Experience Group 0 represents the control group. You see these numbers when you run a Revenue Per Session (RPS) Detail Report.